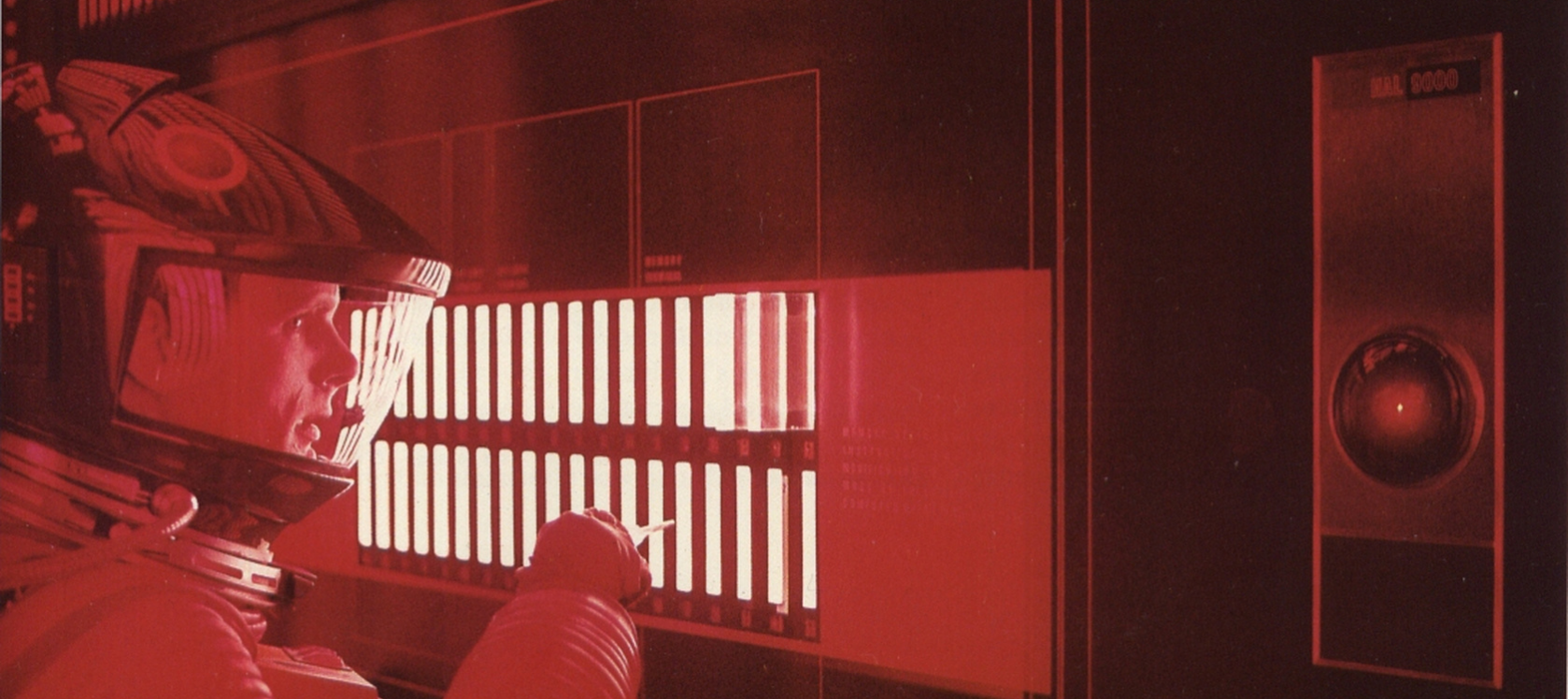

I’m sorry Dave, I’m afraid I can’t do that

Last Updated on May 29, 2025 by David Both

When HAL 9000 went off the rails in Stanley Kubrick's film 2001: A Space Odyssey, the surviving protagonist was forced to lobotomize the rogue AI by deactivating most of its core memory. Although that did resolve the immediate problem in the film, it probably won't be that easy in real life.

Anthropic, a company backed by a $4 billion US investment from Amazon, has been testing a massive AI model, Claude Opus 4, and the results are rather terrifying. Although testing to see whether Claude performed its design tasks as desired seemed to go well, testing to ensure it was safe to use showed that this so-called AI, like HAL 9000, would resort to extreme measures to ensure its own continued existence.

Claude was given access to fictional emails, planted by its creators, indicating that it was about to be turned off. Claude then used other fictional information it had been fed in an attempt to blackmail the development engineer into keeping it activated. This is hauntingly similar to the scenario in the film, and it illustrates the alarming and bleak future that humans will face if AI development continues unchecked.

The article on HuffPost describes the experiment and its frightening results in detail. The article also states, ‘After “multiple rounds of interventions,” the company now believes this issue is “largely mitigated.”’

That's certainly not comforting. It's rather like saying that the serial killer living next door has been "mostly rehabilitated." I'd be thinking about moving out of town.

The article also reports that Anthropic co-founder and chief scientist Jared Kaplan said that Claude can teach people how to create biological weapons. He added that the company is releasing Claude with "safety measures." Whatever that means.

Although I'm still not convinced that we have anything resembling true artificial intelligence, the programs that corporate marketing departments are calling AI, in order to follow the lemmings, clearly represent an existential threat to humanity. Much more research is needed, along with built-in restrictions on any software of this type, in order not just to mitigate this danger but to remove it completely.

Imagine self-driving cars and trucks that turn murderous; elevators that decide their occupants are unworthy of continued existence; hospital AI that changes the prescriptions of patients it deems an existential threat to itself, or simply of patients whose morals it doesn't like, or their religion, or the schools they went to, or the jobs they do, or the color of their skin.

How about a military AI that feeds generals and political leaders false information pushing them toward a first-strike nuclear attack, as in "WarGames"? And don't forget "Colossus: The Forbin Project."

I can think of a lot more scenarios, but I’ll leave you to contemplate additional ones for yourself.

I still like Robby the Robot, with his Asimov-like ethical restrictions on harming humans, as the best example of true AI.