Programming Bash #5: Automation with Scripts

Last Updated on December 29, 2023 by David Both

As sysadmins, those of us who run and manage Linux computers most closely, we have direct access to the tools that can help us work more efficiently. We should use those tools to maximum benefit. So in this article and the next two in this series, we explore using automation in the form of Bash shell scripts to make our own lives as sysadmins easier.

Author’s note: Some of the content for these articles is extracted from Volume 2, Chapter 29 of my three-part Linux self study course, Using and Administering Linux – Zero to SysAdmin, 2nd Edition, Apress, 2023. Parts have been changed significantly to better fit this article format.

Why I use shell scripts

In Chapter 9 of my book, “The Linux Philosophy for SysAdmins,” I state:

“A SysAdmin is most productive when thinking – thinking about how to solve existing problems and about how to avoid future problems; thinking about how to monitor Linux computers in order to find clues that anticipate and foreshadow those future problems; thinking about how to make [their] job more efficient; thinking about how to automate all of those tasks that need to be performed whether every day or once a year.

“SysAdmins are next most productive when creating the shell programs that automate the solutions that they have conceived while appearing to be unproductive. The more automation we have in place the more time we have available to fix real problems when they occur and to contemplate how to automate even more than we already have.”

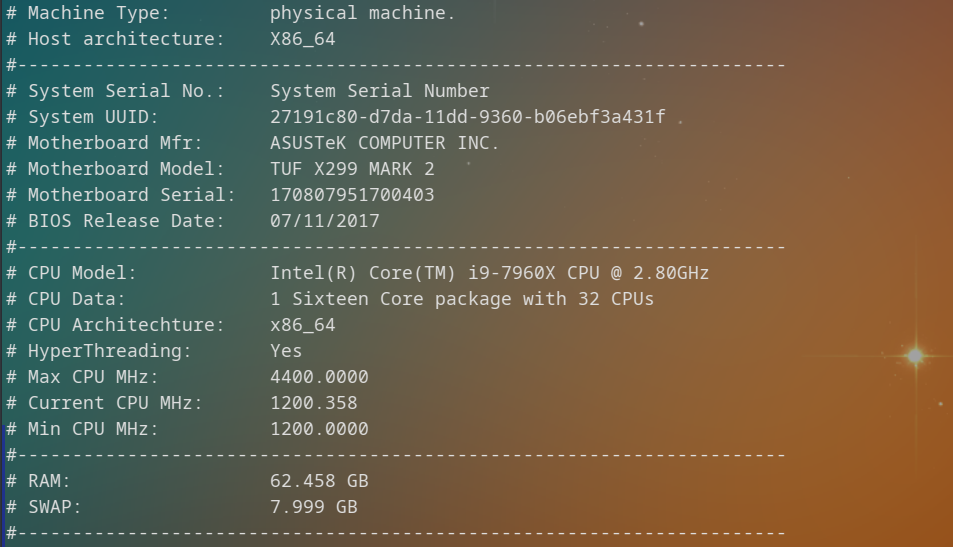

In this article we explore why shell scripts are an important tool for the sysadmin and the basics of creating a very simple Bash script. The header image for this article shows part of the data stream created by one of my scripts.

Why automate?

Have you ever performed a long and complex task at the command line thinking, “Glad that’s done – I never have to worry about it again”? I have – very frequently. I ultimately figured out that almost everything that I ever need to do on a computer, whether it is mine or one that belongs to an employer or a consulting customer, will need to be done again sometime in the future.

Of course I always think that I will remember how I did the task in question. But the next time I need to do it is far enough out into the future that I sometimes even forget that I have ever done it at all, let alone how to do it. For some tasks I started writing down the steps required on a bit of paper. I thought, “How stupid of me!” So I then transferred those scribbles to a simple note pad type application on my computer. Suddenly one day I thought again, “How stupid of me!” If I am going to store this data on my computer, I might as well create a shell script and store it in a standard location, /usr/local/bin, so that I can just type the name of the shell program and it does all of the tasks I used to do manually.
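As a minimal sketch of that last step, a small helper function can copy a finished script into /usr/local/bin and make it executable in one go. The function name install_script is hypothetical, chosen only for illustration, and writing to /usr/local/bin normally requires root privileges.

```bash
# Hypothetical helper, not part of any standard tool: copy a script into a
# directory on the PATH and make it executable.
install_script() {
    local src="$1"
    # Default to /usr/local/bin; a second argument overrides the destination.
    local dest_dir="${2:-/usr/local/bin}"
    # install(1) copies the file and sets its mode in a single step.
    install -m 755 "$src" "$dest_dir/"
}
```

From a root shell, calling the function with the name of a script (and optionally a different destination directory) places an executable copy where it can be run by name alone.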

For me automation also means that I don’t have to remember or recreate the details of how I performed that task in order to do it again. It takes time to remember how to do things and time to type in all of the commands. This can become a significant time sink for tasks that require typing large numbers of long commands. Automating tasks by creating shell scripts reduces the typing necessary to perform my routine tasks.

Shell scripts

Writing shell programs – also known as scripts – provides the best strategy for leveraging my time. Once written, a shell program can be rerun as many times as needed. I can update my shell scripts as needed to compensate for changes from one release of Linux to the next. Other factors that might require changes are the installation of new hardware and software, changes in what I want or need to accomplish with the script, adding new functions, removing functions that are no longer needed, and fixing the not-so-rare bugs in my scripts. These kinds of changes are just part of the maintenance cycle for any type of code.

Every task performed via the keyboard in a terminal session by entering and executing shell commands can and should be automated. Sysadmins should automate everything we are asked to do or that we decide on our own needs to be done. Many times I have found that doing the automation up front saves time the first time.

One Bash script can contain anywhere from a few commands to many thousands. In fact, I have written Bash scripts that have only one or two commands in them. Another script I have written contains over 2,700 lines, more than half of which are comments.

Getting started

Let’s look at a trivial example of a shell script and how to create it. In my article on Bash command line programming, I used the example found in every book on programming I have ever read, “Hello world.” Make ~/testdir6 the PWD for this article. From the command line, that example looks like this.

[dboth@testvm1 testdir6]$ echo "Hello world"

Hello world

By definition, a program or shell script is a sequence of instructions for the computer to execute. But having to type them into the command line every time gets quite tedious, especially when the programs get to be long and complex. Storing them in a file that can be executed whenever needed by entering a single command saves time and reduces the possibility for errors to creep in.

You can try these examples along with me but I suggest doing so as a non-root user on a test system or VM. You can also use a test directory. If you have been following this series from the beginning, testdir6 is a good choice or you can create your own. Although our examples will be harmless, mistakes do happen and being safe is always preferable.

Our first task is to create a file that we can use to contain our program. Use the touch command to create the empty file, hello.sh, and then make it executable. I use the .sh filename extension to indicate that this is a shell script. The extension is irrelevant to whether the program is executable or not. The file mode of 774 tells us humans and Linux that this is an executable file and we need to set that as well.

[dboth@testvm1 testdir6]$ touch hello.sh

[dboth@testvm1 testdir6]$ chmod 774 hello.sh
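To check that the mode was applied, the file's permissions can be displayed in both octal and symbolic form. The sketch below repeats the two commands in a throwaway directory so it can be run anywhere. It assumes the GNU coreutils version of stat, and the variable names workdir and mode_report are illustrative only.

```bash
# Work in a temporary directory so nothing in testdir6 is disturbed.
workdir=$(mktemp -d)
touch "$workdir/hello.sh"
chmod 774 "$workdir/hello.sh"

# %a prints the octal mode and %A the symbolic form (GNU stat syntax).
mode_report=$(stat -c '%a %A' "$workdir/hello.sh")
echo "$mode_report"

# Clean up the temporary directory.
rm -r "$workdir"
```

This should print 774 -rwxrwxr--, meaning that the owner and group can read, write, and execute the file while everyone else can only read it.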

Now use your favorite editor to add the following line to the file.

echo "Hello world!"

Save the file and run it from the command line. You can use a separate shell session to execute the scripts we create in this article.

[dboth@testvm1 testdir6]$ ./hello.sh

Hello world!

This is the simplest Bash program most of us will ever create – a single statement in a file. We will build our complete shell script around this simple Bash statement. The actual function of the program is irrelevant for our purposes, so this simple statement allows us to build a program structure – a template for other programs – without needing to be concerned about the logic of an ultimate functional purpose. Thus we can concentrate on basic program structure and creating our template in a very simple way. We can create and test the template itself rather than a complex functional program.

Sha-bang

Our single statement works fine so long as we use Bash or a shell compatible with the commands we use in the script. If no shell is specified in the script, the default shell is used to execute the script commands. Most Bash commands run in the Korn shell (ksh) but there will be some incompatibilities with other shells that are less like Bash.

Our next task is to ensure that the script will run using the Bash shell even if another shell is the default. This is accomplished with the sha-bang line. Sha-bang is the geeky way to describe the #! characters that are used to explicitly specify which shell is to be used when running the script. In this case we specify Bash, but it could be any other shell. If the specified shell is not installed, the script will not run.

Add the sha-bang line as the first line of the script so that it now looks like this.

#!/usr/bin/bash

echo "Hello world!"

Run the script again but you should see no difference in the result. If you have other shells installed such as ksh, csh, tcsh, zsh or some other, start one and run the script again.
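Because the output looks the same no matter which shell you start from, it can help to have the script say which shell is interpreting it. The following is only a sketch. The function name report_shell is made up for this illustration, and it relies on the fact that Bash sets the BASH_VERSION variable while most other shells do not.

```bash
#!/usr/bin/bash
# Illustrative helper, not part of the article's script: report which shell
# is interpreting this code.
report_shell() {
    # Bash sets BASH_VERSION; most other shells leave it unset.
    if [ -n "${BASH_VERSION:-}" ]; then
        echo "Running under Bash ${BASH_VERSION}"
    else
        echo "Not running under Bash"
    fi
}

report_shell
```

Saved as an executable file and launched from ksh, zsh, or another shell, a script like this should still report Bash, because the sha-bang line on the first line decides which interpreter runs it.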

Scripts vs compiled programs

When writing programs to automate – well, everything – sysadmins should always use shell scripts. Because shell scripts are stored in ASCII text format, they can be viewed and modified by humans just as easily as they can be read by computers. You can examine a shell program and see exactly what it does and whether there are any obvious errors in the syntax or logic. This is a powerful example of what it means to be open.

I know some developers tend to consider shell scripts something less than true programming. This marginalization of shell scripts and those who write them seems to be predicated on the idea that the only true programming language is one that must be compiled from source code to produce executable code. I can tell you from experience this is categorically untrue.

I have used many languages including BASIC, C, C++, Pascal, Perl, Tcl/Expect, REXX and some of its variations including Object REXX, many shell languages including Korn, csh and Bash, and even some assembly language. Every computer language ever devised has had one purpose – to allow humans to tell computers what to do. When you write a program, regardless of the language you choose, you are giving the computer instructions to perform specific tasks in a specific sequence.

Scripts can be written and tested far more quickly than programs in compiled languages. Programs usually must be written quickly to meet time constraints imposed by circumstances or the PHB (pointy-haired boss). Most of the scripts we write are to fix a problem, to clean up the aftermath of a problem, or to deliver a program that must be operational long before a compiled program could be written and tested.

Writing a program quickly requires shell programming because it allows quick response to the needs of the customer whether that be ourselves or someone else. If there are problems with the logic or bugs in the code they can be corrected and retested almost immediately. If the original set of requirements was flawed or incomplete, shell scripts can be altered very quickly to meet the new requirements. So, in general, we can say that the need for speed of development in the SysAdmin’s job overrides the need to make the program run as fast as possible or to use as little as possible in the way of system resources like RAM.

Most things we do as sysadmins take longer to figure out how to do than they do to execute. Thus, it might seem counter-productive to create shell scripts for everything we do. Writing the scripts and making them into tools that produce reproducible results and which can be used as many times as necessary takes some time. The time savings come every time we can run the script without having to figure out again how to perform the task.

Final thoughts

We have not really gotten very far with creating a shell script in this article but we have created a very small one. We have also explored the reasons for creating shell scripts and why they are the most efficient option for the system administrator, rather than compiled programs.

Next time we will begin creation of a Bash script template that can be used as a starting point for other Bash scripts. Our template will ultimately contain a help facility, a GNU licensing statement, a number of simple functions, and some logic to deal with those options as well as others that might be needed for the scripts that will be based on this template.

Series Articles

This list contains links to all eight articles in this series about Bash.

- Programming Bash #1 – Introducing a New Series

- Programming Bash #2: Getting Started

- Programming Bash #3: Logical Operators

- Programming Bash #4: Using Loops

- Programming Bash #5: Automation with Scripts

- Programming Bash #6: Creating a template

- Programming Bash #7: Bash Program Needs Help

- Programming Bash #8: Initialization and sanity testing